Reducing Amazon Aurora Costs

Has AWS Aurora’s complexities and cost calculation challenges got you down? You are not alone. From blog articles to Reddit, developers are trying to understand how to reduce their Amazon Aurora costs. Learn how to rein them back in with these tips.

The Benefits of Amazon Aurora

Amazon’s Aurora is one of many of their “database as a service” offerings. It carries many of the same features of RDS but with improvements and features to the underlying engines provided by Amazon Web Services. For example, if a database often sees spiky or unpredictable loads, leveraging the Aurora Serverless might be a good idea. Aurora is also backed by highly reliable and available storage that is automatically replicated across multiple availability zones in a region.

Aurora supports numerous typical features you’d expect from a DBaaS, such as backups, snapshots, and patching automation. The benefit of Aurora comes from the additional features that can be enabled. Global Database for low-latency multi-region replicas, storage autoscaling, and Backtracking if using MySQL.

The Challenge With Aurora Costs

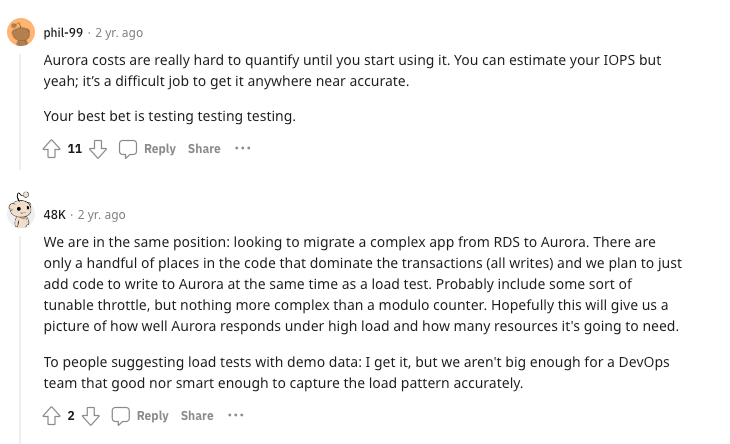

We’ve covered how to accurately estimate costs when using AWS Aurora already. It’s complicated, especially when first starting to use the service. The complexity increases when using service add-ons like Backtrack or Global Databases.

Even if just using Aurora without any of the add-ons, a spike in data transfer or an unexpected amount of traffic from the database may unexpectedly raise costs due to how data transfer costs are calculated.

Strategies for Reducing Your Aurora Costs

Get visibility into the problem

Before you can reduce costs for Aurora, you need to understand how the database is being used. Is the database using all of the storage that’s allocated to it? Is it right-sized? If the database frequently doesn’t use much CPU or memory, consider reducing the instance size.

Be aware that while storage can autoscale up, storage usage can usually be reduced dynamically by the Aurora engine. This is not possible with some earlier versions of Aurora MySQL; rebuilding the database on these versions is the only solution at this time. It’s worth noting that deleting rows won’t trigger the scaling down either; the use of pg_repack for PostgreSQL or OPTIMIZE TABLEFor for MySQL is needed. More details can be found in the AWS documentation for Storage Autoscaling.

Beyond optimizing and tuning based on observed performance, billing alarms should be established so a surprise invoice isn’t received at the end of the billing cycle. These alarms are robust and can alarm based on actual or forecasted usage.

Optimize your non-production environments

When running Aurora, you can optimize non-production environments in a few ways. The easiest is to ensure you size the instance appropriately. For example, running a test or integration environment with a database the same size as the production environment is usually not required and ends up with a severely underutilized database instance that costs a lot of money.

One of the best ways is by running Aurora Serverless, which automatically adjusts to load. It can be shut down almost fully and re-provisioned very quickly when it is needed again.

If Aurora Serverless isn’t an option for the application, consider using scheduled jobs with Amazon Systems Manager to shut down the non-production environments during non-working hours.

On the topic of shutting resources down, take stock of the non-production environments from time to time to terminate any resources that are no longer required to be running or have served their purposes.

Use the correct type of I/O access for your use case

Using the correct type of I/O for your database is another good optimization strategy. Setting alerts on the IO:DataFileRead and IO:DataFilePrefect metrics in CloudWatch are great ways to monitor I/O costs. You can also use these metrics as evidence to consider moving to (or away) from I/O Optimized Aurora storage.

Consider I/O Optimized storage if 25 % or more of your database spend is on I/O. You should monitor the database metrics closely and switch back to normal storage if you find that the I/O is not as consistently high anymore. This option can be toggled at any time.

Switch from Serverless to provisioned in production

As mentioned above, Aurora Serverless is a great option for environments that don’t always need to be on. If the database load in production is bursty or unpredictable, Aurora Serverless may be a good option, but typically, production environments need an always-on database with a minimum level of performance. Serverless is not a cost-friendly option when needing an always-on database since, for the same resources, Aurora Serverless is more costly than a provisioned database.

A great example of this is needing four Serverless v2 ACUs worth of resources at all times. For a similarly sized database on-demand, it would be 70 % less expensive to use a t4g.large instance.

Right-size your provisioned instance types

When starting out using Aurora, you may not have the best idea of what resource sizing is best for your workload. Using CloudWatch and Enhanced Monitoring, you can keep a close eye on important metrics that will tell you when it may be time to scale up or down.

A rule of thumb is if the Aurora database is using less than 30 % CPU or I/O, it is probably over-provisioned. Ensure you routinely check these metrics to keep your database costs in line with the actual resource requirements.

Once a database size has been chosen and seems to have stabilized, reserved instances can be purchased for up to a 40 % discount over on-demand instances.

Trim your backups

With automated backups and snapshots, it’s easy to be surprised by a large invoice at the end of the month. It’s a good idea to think about the backup strategy early. Ask if you really need the full 35 days of snapshots for your integration or test environments.

For the environments where backups are needed, you can export your snapshots to S3 for a low-cost, reliable means of storage for these backups. It’s important to note that these are exported as Apache Parquet files, which is great since that format compresses really well, but some data type changes occur depending on the database engine.

If you export snapshots to S3, also remember to clean them up in the RDS console to stop incurring those costs.

Offload large objects to Amazon S3

Between large objects and TOAST, PostgreSQL makes it easy to load large data into a database. Aurora’s fast and reliable storage layer makes this even easier. But with storage autoscaling, it is also really simple to bloat the database. The autoscaling may not necessarily scale down without intervention either, so one consideration should be where to store these large data files. One option is to store a pointer to S3, which is significantly less expensive than storing it in the database.

Timescale takes this one step further with tiered storage. Tiered storage abstracts the complication of needing to worry about S3 permissions and lifecycle management by handling that automatically. It also ends up less expensive than S3: $0.021 per GB/month versus the average $0.023 per GB/month that S3 charges.

Optimize your SQL queries

Of course, one of the most important factors in reducing Aurora's costs is in the realm of normal database maintenance. Optimizing SQL queries can have an incredible impact on the database resources used. When determining instance size, having a number of poorly optimized queries or malformed indexes can make it appear that the database is operating at a higher utilization than it may actually need to be.

Take advantage of EXPLAIN and Amazon’s Performance Insights to find troublesome queries and create indexes or partitions to improve performance.

Controlling Costs for Time-Series Projects

If you use Aurora for time-series data, Timescale provides a cost-effective alternative. Timescale is fully PostgreSQL-compatible with enhancements to make time-series data calculations easier and less expensive. As noted above, in many cases, Timescale provides better options for normal PostgreSQL use cases with a low-cost, infinite scalability storage tier so you can tier your infrequently accessed data (while still being able to query it) and usage-based storage costs.

And then, if you’re using Aurora Serverless, there is this:

Check out our Aurora Serverless benchmark and see for yourself. You can try Timescale today, free for 30 days.